|

Robots don't see the world like humans do, at least not yet. They

don't recognize discrete objects and have little common

sense—that it's better to drive over a bush than a rock, for

instance. Instead of seeing, today's robots measure. They use

a variety of sensors—cameras, laser range finders,

radar—to gauge the shape, slope, and smoothness of the terrain

ahead. They then use these data to figure out how to stay on the

road and avoid obstacles. At least that's the idea, but the DARPA

Grand Challenge showed it's a lot harder than it sounds. This

slide show presents the major measuring techniques used by

the various teams.—Jason Spingarn-Koff

|

|

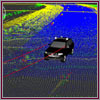

Laser Sensing

Laser scanners, commonly known as lidar (for light

detection and ranging), were the most

common sensor for Grand Challenge robots. A beam of light

bounces off a spinning mirror and sweeps the terrain ahead. By

measuring the time it takes for the beam to return, the sensor

can calculate the distance to objects. Line by line, the robot

builds a simple 3-D model of the terrain in front of the

vehicle. Many teams used several laser scanners to increase

the amount of detail. Team DAD even built a custom sensor with

64 spinning lasers. But laser scanners have limitations: They

see only a narrow slice of the world and have a relatively

short range (60 to 150 feet). The beams also can't detect

colors and may bounce off shiny surfaces, so it's hard to spot

certain hazards (such as a body of water). They're also bad

for stealthy applications, such as those preferred by the

military, because they emit light.
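
The timing idea behind laser ranging can be put in a few lines of Python. This is only a sketch of the arithmetic, not any team's sensor code; the function names are made up for illustration, and the only inputs are the speed of light and the range figures quoted above.

```python
# Sketch of time-of-flight ranging. Function names are hypothetical.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre
FEET_PER_METER = 3.28084


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance in metres to the surface that reflected the pulse."""
    # The pulse travels out and back, so the one-way distance is half the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Time in seconds for a pulse to reach a surface and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    for feet in (60, 150):
        seconds = round_trip_for_range(feet / FEET_PER_METER)
        print(f"{feet} ft: round trip of {seconds * 1e9:.0f} ns")
```

At the 60-to-150-foot ranges mentioned above, the round trip lasts only about 120 to 300 nanoseconds, so the sensor's timing electronics have to be very fast.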

|

|

|

Video Cameras

Video cameras see fast and far—all the way to the

horizon. They can measure the texture and color of the ground,

helping robots understand where it's safe to drive and

alerting them to dangers. And since cameras don't emit light,

they are well suited for stealthy operations. But there's a

serious drawback: it's difficult to use just one camera to

figure out the size and distance of objects ahead. Recent

research by Andrew Ng at Stanford suggests this is possible in

some circumstances—by analyzing road edges,

texture, and converging lines—but this needs more

testing and wasn't used in the Grand Challenge competition.

Video cameras also have limited use at night or in dust storms

or other bad weather, and they can be blinded by bright light

such as the setting sun.

|

|

|

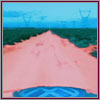

Adaptive Vision

The Stanford team invented a technique called "adaptive

vision," which allows its robot, Stanley, to see farther and

drive faster, even as the road changes over different types of

terrain. Here's how it works: Stanley uses its laser range

finders to locate a smooth patch of ground ahead and samples

the color and texture of this patch by scanning the video

image. It then looks for this color and texture in the rest of

the video image. If a smooth "road" extends about 130 feet

toward the horizon, Stanley knows it can speed up. If the road

suddenly changes, the robot slows down until it figures out

where it is safe to drive.
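
The sample-then-search idea can be sketched in Python. This is a deliberately simplified stand-in, not the Stanford team's actual method: it assumes the "road look" can be summarized by one average color, and every name and threshold here (`road_mask`, `max_color_distance`, `min_fraction`) is hypothetical.

```python
# Simplified sketch: learn the road's color from a laser-verified patch,
# then look for that color in the rest of the image. All names and
# thresholds are assumptions for illustration.
from math import dist


def mean_color(image, patch_pixels):
    """Average (r, g, b) over the pixels the lasers marked as smooth."""
    sums = [0.0, 0.0, 0.0]
    for r, c in patch_pixels:
        for k in range(3):
            sums[k] += image[r][c][k]
    return tuple(s / len(patch_pixels) for s in sums)


def road_mask(image, patch_pixels, max_color_distance=40.0):
    """True for every pixel whose color is close to the sampled patch."""
    reference = mean_color(image, patch_pixels)
    return [[dist(px, reference) <= max_color_distance for px in row]
            for row in image]


def farthest_road_row(mask, min_fraction=0.5):
    """Scan upward from the bottom row (nearest the vehicle) and return the
    index of the last row that still looks mostly like road, or None."""
    farthest = None
    for r in range(len(mask) - 1, -1, -1):
        if sum(mask[r]) / len(mask[r]) >= min_fraction:
            farthest = r
        else:
            break  # the road-like region is interrupted here
    return farthest


if __name__ == "__main__":
    ROAD, BRUSH = (150, 140, 120), (60, 110, 50)
    image = [[BRUSH] * 4] + [[BRUSH, ROAD, ROAD, BRUSH] for _ in range(3)]
    laser_patch = [(3, 1), (3, 2)]  # pixels the lasers judged smooth
    print(farthest_road_row(road_mask(image, laser_patch)))
```

A speed controller could then raise the speed limit when the road-like region reaches far up the image and lower it when the region is cut short.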

|

|

|

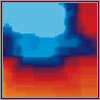

Stereo Vision

Inspired by the way humans see, some teams used two video

cameras in a technique called stereo vision. Princeton,

TerraMax, and Team DAD (in the first Grand Challenge, in 2004)

all tried the technique, with varying degrees of success. The

two cameras are mounted side by side, and

software measures the slight shift between the two incoming

images. (You can try this yourself by holding a finger at

arm's length and looking at it only with your right eye, then

only with your left eye; your finger will slightly "jump.")

The shifts are then compiled into a "difference map" (more commonly called a disparity map), which

crudely shows the distance of approaching objects (represented

here by different colors). The problem is that objects in the

far distance look largely the same to both cameras, limiting

the accuracy where it matters most: for high-speed driving.
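
The standard pinhole-camera relation makes the long-range problem concrete: depth equals focal length times camera spacing divided by the pixel shift. The Python sketch below uses made-up camera numbers (an 800-pixel focal length and cameras 0.5 meters apart), which are assumptions for illustration and not any team's actual rig.

```python
# Sketch of stereo depth from pixel shift (disparity). Camera parameters
# below are hypothetical.
FOCAL_LENGTH_PX = 800.0   # assumed focal length, in pixels
BASELINE_M = 0.5          # assumed spacing between the two cameras


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Distance in metres to a point that shifts by disparity_px pixels."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def disparity_for_depth(focal_px, baseline_m, depth_m):
    """Pixel shift produced by a point depth_m metres away."""
    return focal_px * baseline_m / depth_m


def depth_error_for_one_pixel(focal_px, baseline_m, depth_m):
    """How far off the depth estimate is if the measured shift is
    one pixel too small."""
    d = disparity_for_depth(focal_px, baseline_m, depth_m)
    if d <= 1:
        return float("inf")  # the shift is too small to measure at all
    return depth_from_disparity(focal_px, baseline_m, d - 1) - depth_m


if __name__ == "__main__":
    for depth in (5.0, 50.0):
        err = depth_error_for_one_pixel(FOCAL_LENGTH_PX, BASELINE_M, depth)
        print(f"object at {depth:.0f} m: one-pixel mistake costs {err:.2f} m")
```

With these assumed numbers, a one-pixel matching mistake shifts the estimate by a few centimeters for an object 5 meters away but by several meters for one 50 meters away.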

|

|

|

Radar

Radar sensors (the name comes from radio detection

and ranging) send out radio waves toward a target and

measure the return echo. They can see far into the distance,

even through dust clouds and bad weather (which easily foil

cameras and may degrade lidar). The downside is that radar

beams are not as precise as lasers; harmless objects

often show up as obstacles, confusing the robot. Carnegie Mellon's

Red Team used radar as a second sensor, complementing lidar to

spot large objects far ahead. Carnegie Mellon researchers are

developing a more sensitive unit. Called solid-state

millimeter wave radar, this could be a potent sensor on future

robots.

|

|

|

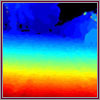

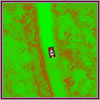

Cost Map

Many Grand Challenge robots used this software technique to

find the road and avoid obstacles. First the program compiles

data from a variety of sensors (laser scanners, cameras,

radar) and builds a map of the terrain ahead. By finding

smooth areas and pinpointing obstacles, the program can divide

the world into areas for driving that are good ("low cost")

and bad ("high cost"). In this image from Stanford, green

represents laser measurements, while black represents unknown

grid cells or no measurements. Red signifies grid cells that

are judged to be not drivable (obstacles), while white

signifies grid cells judged to be drivable (road).
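
A toy version of such a grid can be built in Python. This sketch assumes one simple rule that is not taken from any team's software: a cell is an obstacle if the height measurements that land in it differ by more than a threshold. The function name, the cell size, and the 0.15-meter threshold are all hypothetical.

```python
# Toy cost map: label each grid cell from the height measurements that
# fall inside it. The labeling rule and thresholds are assumptions.
UNKNOWN, DRIVABLE, OBSTACLE = "unknown", "drivable", "obstacle"


def build_cost_map(points, rows, cols, cell_size_m=1.0,
                   max_height_spread_m=0.15):
    """points is an iterable of (x_m, y_m, z_m) measurements."""
    lowest = [[None] * cols for _ in range(rows)]
    highest = [[None] * cols for _ in range(rows)]
    for x, y, z in points:
        r, c = int(y // cell_size_m), int(x // cell_size_m)
        if 0 <= r < rows and 0 <= c < cols:
            lowest[r][c] = z if lowest[r][c] is None else min(lowest[r][c], z)
            highest[r][c] = z if highest[r][c] is None else max(highest[r][c], z)

    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            if lowest[r][c] is None:
                row.append(UNKNOWN)    # no measurement reached this cell
            elif highest[r][c] - lowest[r][c] > max_height_spread_m:
                row.append(OBSTACLE)   # big height jump inside one cell
            else:
                row.append(DRIVABLE)   # measurements agree: smooth ground
        grid.append(row)
    return grid


if __name__ == "__main__":
    scan = [(0.2, 0.2, 0.00), (0.8, 0.3, 0.05),   # flat ground
            (1.5, 0.5, 0.00), (1.6, 0.5, 0.60)]   # something 0.6 m tall
    print(build_cost_map(scan, rows=1, cols=3))
```

The three labels correspond to the white (drivable), red (obstacle), and black (unknown) cells described above; a fuller version would store a numeric cost per cell rather than a label.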

|

|

|

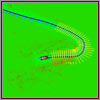

Path Planning

This software program allows the robot to find the best path

from point to point, even as it avoids obstacles. Some robots

use a searching algorithm, a sort of mathematical recipe, to

figure out every possible path, then compare each to the cost

map to find which path is best. They must also factor in how

fast to go and which paths are physically impossible (e.g.,

sharp turns could cause a rollover). As they're driving, the

robots must constantly update their paths to stay on the

racecourse and steer toward the finish.
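
One common searching algorithm is Dijkstra's algorithm, which finds the lowest-cost route through a grid without having to list every path one by one. The Python sketch below applies it to a small cost map. It is an illustration only: the article does not say which algorithm any team used, the function name and grid are hypothetical, and the sketch ignores speed and turning limits.

```python
# Sketch of path search over a cost map using Dijkstra's algorithm.
# Not any team's planner; vehicle dynamics are not modeled.
import heapq


def cheapest_path(cost, start, goal):
    """cost is a 2-D list of per-cell costs; None marks a cell that cannot
    be driven. Returns a list of (row, col) cells, or None if blocked."""
    rows, cols = len(cost), len(cost[0])
    best = {start: 0.0}          # lowest known total cost to reach each cell
    came_from = {}               # back-pointers for rebuilding the route
    frontier = [(0.0, start)]    # priority queue ordered by total cost
    while frontier:
        total, cell = heapq.heappop(frontier)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if total > best[cell]:
            continue             # stale queue entry; a cheaper one was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                new_total = total + cost[nr][nc]
                if new_total < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_total
                    came_from[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_total, (nr, nc)))
    return None


if __name__ == "__main__":
    cost_map = [[1, 1,    1],
                [1, None, 1],    # None: an obstacle
                [1, 9,    1]]    # 9: rough but passable ground
    print(cheapest_path(cost_map, start=(2, 0), goal=(2, 2)))
```

In this example the planner takes the longer loop around the obstacle because crossing the rough cell costs more than the detour. Rerunning the search as new sensor data changes the cost map is one simple way to keep the path up to date while driving.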

|

|

|