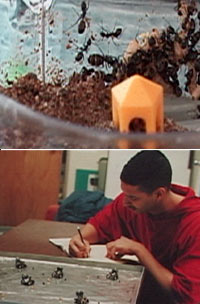

James McLurkin, swamped by a swarm

See any resemblance? McLurkin's ants ... and his Ants

Swarming is just one way his robots are like bees, McLurkin says.

Nobody, not even McLurkin, knows how to program 2,000 robots. But from the look of things, it's only a matter of time.

"I'm on the edge of explored space...."

"There definitely is Zen in there," McLurkin says of his swarm.

McLurkin has always been a big Lego fan.

"We can just sit back and collect our data," McLurkin says. "Mostly."

"It is difficult to articulate to people who aren't in the field how stupid robots are," McLurkin says.

Building robots has always been McLurkin's first love.

"It's just you and your computer and your software until the wee hours of the morning."

'Bots and Bugs

NOVA scienceNOW: When did you build your first robot, and what could it do?

McLurkin: It was between my junior and senior year in high school. It was able to drive around. You could program it and tell it where to go. Not to go to a particular location, but to go forward, stop, turn right, like the Big Trak toy of the day. And it could squirt parent-based life forms with its water gun.

NOVA scienceNOW: And when did you build your first swarm of robots?

McLurkin: My first swarm came when I was an undergrad at MIT. I finished them in 1995. What could they do? Very simple things. They could move towards one another. They could move away from one another. They could pick up food. They could play some fun games like tag and manhunt. They were called the Ants.

NOVA scienceNOW: You have your own colony of live ants. How have they helped you in your work?

McLurkin: The real ants are great for inspiration. You see how nature has solved certain hard problems, and what problems nature simply hasn't solved because they are not worth solving. For example, ants don't worry about avoiding each other. They simply walk over each other. Ants don't worry about getting lost and dropping things. As long as most ants are doing the right things most of the time, they'll all get to the common goal.

NOVA scienceNOW: Do you find yourself poring over your ants for long periods?

McLurkin: Kind of. You see how they are moving and what they are thinking about. If they don't like something, for instance, ants will cover it up with dirt. So if I give them some food that they don't like, I'll come back the next day and they will have buried it, which is a pretty clear indication that they don't like that and I should not give that to them again. Another example is when my ants relocate. If scouts find somewhere to live that's better than where they are now, they'll go back and physically pick up another worker and carry her to the new nest location.
If she likes it, then she will go back and pick up another worker. The scouts will approach the worker head-on and grab her by their mandibles and just hoist her off the ground. If you watch WWF [the World Wrestling Federation], that's called a suplex—without the dropping part. So if the ants approve of their new home, somehow a group decision is made and they all start carrying ants and young over, and they will all relocate. The process can happen quite quickly once it gets started. You get this exponential building up of ants at the new location. It's amazing how it happens, particularly how the group decision is made.

NOVA scienceNOW: You're fascinated by other social insects as well, like bees, right?

McLurkin: Yes. When I started on this swarm project, I visited a biologist at Cornell, Professor Thomas Seeley. He's done a bunch of really good work studying honeybees. I think it would be very exciting to test his research on robots, to see if the robots can do the same things as bees can. They ought to be able to do similar things. They have similar sensors and have access to the same kind of information. They have the same kind of physical limitations, especially in terms of their communication with each other. Bees don't have cell phones. They don't have GPS. They are not sending e-mail to each other. They are only communicating to nearby bees. And the robots have the same kind of communication constraints, same kind of mobility. Bees pretty much live in a two-dimensional world. They do fly but they don't fly to 40,000 feet. They stay pretty close to the surface of the Earth, so you can imagine that they look kind of like a bunch of robots rolling around on the ground.

NOVA scienceNOW: So how does the complexity of behavior in your current robot swarm compare with that in an ant colony or swarm of bees?

McLurkin: Oh, man, 0.0001 percent. The kind of complexity that ants and bees exhibit is mind-boggling.
There's a lot of software in those insects, and it has been very heavily tuned over the past 65 million years. It's very complicated. Some of it is there for things that only happen once every 1,000 years. Every 1,000 years there's a big flood, say. The ants have to have software to deal with that, because 1,000 years on an ecological timescale is all the time.

Coming in handy

NOVA scienceNOW: So the "spiders" in Spielberg's movie Minority Report—a swarm of tiny robots that search an entire apartment building identifying people by scanning their eyeballs—is that kind of swarm behavior not entirely fanciful for our future?

McLurkin: No. No. The government is very, very interested in swarms of robots that can be deployed into bad places looking for bad people doing bad things with bad chemicals. If you were in the Washington, D.C. area this summer, you saw the Brood X cicadas that were everywhere. Imagine a swarm of robots that you could infiltrate a city with. You might be able to find Osama. The government is very interested in stuff like that, and, no, it's not all science fiction.

NOVA scienceNOW: What other potential applications do you see for swarms?

McLurkin: Well, I'm not really into the military-style applications. I'd love to go to Mars with robots. Right now we have two robots on Mars. What if we had 2,000? We could cover a much larger area. But we can't communicate with 2,000 robots from Earth. It takes too long to get a signal to Mars. So they would have to be autonomous. They would have to work on their own and cooperate and communicate. But nobody has any idea how to program 2,000 robots right now. So I'm in an area where you can make a lot of strides. One of the examples I've been talking about lately is earthquake rescue. Humans are completely inadequate to deal with the problems that happen after an earthquake. Either we are too weak in that we can't pick up the rubble, or we're too big and we can't go through the rubble.
Imagine a group of robots—1,000 or so cockroach-sized scouts. They're pretty simple. Their job is to look for heat, life, motion, sound—things like that. And then they coordinate. Once they find things they set up a network and relay information back out. Then you dispatch a bunch of rat-sized structural-engineer robots. These are the brains of the operation. They go through and analyze the structure and solve the reverse Jenga puzzle of how to pull pieces off survivors to get them out. Then you bring in a bunch of brontosaurus-sized heavy-lifting robots. They're not very bright but they sure are strong. They lift the pieces up as directed by the structural-engineer robots to get the people out.

NOVA scienceNOW: Cool. So what, in essence, are your current robots saying to each other? Is it like "I found the edge of the territory. Tell the others?"

McLurkin: Exactly. The robots communicate using very, very simple things. Each robot typically says maybe 80 bytes of information to another robot, four times a second. Eighty characters is about one line of text on a printed page. They say things like, "I'm on the edge of explored space. I need more robots to help me explore this new room over here." They say things like, "I am one communication hop away from the chargers." If you want to find the chargers and you are five hops away, you can move toward a four-hop robot and then move toward a three-hop robot and a two-hop robot, etc. etc. Eventually you will get close to the robots that are right next to the chargers. Then you can see the chargers and go right in. They say things like, "I'm robot number five of seven. I'm recruiting you to be robot six of seven. And it's your job to recruit robot seven of seven." If you're doing, for example, follow the leader, you can share information as to who needs to recruit whom.

Swarming

NOVA scienceNOW: You've written that local interactions among individual robots produce global behavior, "sometimes unexpectedly so."
Can you give an example of unexpected behaviors that arose?

McLurkin: There are two things here. There's unexpected in that, "Look, there's emergent intelligence." Something amazing has happened that I didn't even know has happened. The robots are doing amazing things. There's also unexpected in that, "Look, that is not what I expected to happen. There must be an error in my software." I'm describing two reactions to the same phenomenon. I don't attribute emergent behaviors to amazing insights and interactions among the robots. I attribute them to me as the engineer not understanding the system. One example of an emergent behavior that I was not anticipating: I was trying to get the robots to spread evenly throughout their environment, trying to have them move themselves so that there were robots everywhere in the whole room, leaving no empty spaces. And I made an error in the program; I flipped some signs in the equations. And when I ran the software, the robots formed into little clumps. Essentially they made polka dots on the floor, which was very entertaining after the fact. At the time it wasn't so entertaining, because they weren't supposed to do that. But it was really very cute retrospectively. I wish I had taken pictures of it.

NOVA scienceNOW: Do you feel a closer affinity to the swarm as a whole than you do to, say, an individual robot?

McLurkin: On an emotional level, individual robots are more appealing because you can look at one—maybe it's robot #73—and watch that robot run around and wonder, "Hunh? What is that robot doing?" You can identify it and personify it and get into it. But the whole magic is at the swarm level. It does take some practice. You've got to learn how to twist your neck in the right direction to get a feel for what the whole swarm is doing and what you told the whole swarm to do. There definitely is Zen in there. There's a level of using the Force. There's a—what's the word?—gestalt. There's a something!

NOVA scienceNOW: A synergy?

McLurkin: Synergy, yes. But that doesn't describe what you the user needs to employ to understand what is happening. You need to be very laid back and develop a very good qualitative feel for what the swarm is going to do.

NOVA scienceNOW: You mean intuition.

McLurkin: Thank you! Intuition. And that has taken a long time. It's very important to trust that and be able to have access to that, because intuition often operates on a subconscious level, and it affects your design decisions. It affects what problems you are trying to solve. It affects how you structure your software. It affects how you structure your problems. My hope is that somewhere in the intuition are some of the answers to the problems I'm trying to solve. If I'm able to consistently make the robot do something that is correct, then at some level I must understand something about how this swarm works. The trick is for me to be able to get at that knowledge and articulate it. Once I can say it and write it, then I can study it very carefully and ascertain whether or not it is actually correct. Then publish about it and become famous, write lots of papers, become a professor, etc. etc.

NOVA scienceNOW: Right. So what happens if one or more of the robots in your swarm fails?

McLurkin: The whole advantage of the swarm is that failures of individual robots do not largely affect the output of the group. The magic word for that is what's known as a distributed system. The system is distributed amongst many individuals. So if you take the system apart piece by piece, it will still function. The opposite of that is a centralized system, where if you eliminate the centralized controller the whole thing falls apart.

NOVA scienceNOW: You use things called distributed algorithms to program your robots.

McLurkin: Exactly. A distributed algorithm is a piece of software that runs on mini computers. An example of this is the sharing software, as opposed to Napster.
Napster is actually an example of a centralized system, which is why the lawyers were able to shut it down, because they had someone to sue. With something like Kazaa, it is spread out all over the Internet. You can't sue it. There is nothing to sue. Ants and bees, as you might imagine, are very distributed systems, where each individual system is running its own software, has its own sensors, makes its own decisions.

NOVA scienceNOW: Your robots also rely on what you call Robot Ecology. What's that?

McLurkin: In the iRobot Swarm [iRobot is a Burlington, Mass.-based robot manufacturer for which McLurkin works], there was a lot of very serious engineering that we had to overcome in order to get to the point where we could just sit down and write software, which is where we are now. And the engineering that we had to deal with was designing robots that you never had to touch. Any time you have to touch one robot, even something simple like turning it on, you will most likely have to do the same thing with all 100 of them. So we developed this mantra—"robots in the glass box." You can see them but you are not allowed to touch them. We had to design all this support. We call it a swarm extrastructure, as opposed to infrastructure. It's a play on words. So we could go about our work, and the robots could take care of themselves, things like charging, which you alluded to; remote power on; remote power off; remote programming; some remote debugging; ability to get data off the whole swarm. There is a lot of software and a lot of hardware that let the robots do their thing, and we can just sit back and collect our data. Mostly.

Around the bend

NOVA scienceNOW: So what's our collective future with robots? Will they soon be ubiquitous in our lives, even swarm robots?

McLurkin: Well, many of the tasks that robots are good for and multiple robots can do even better—especially things that involve searching or coordination or security or mapping—are dangerous, dirty, and dull, things that people don't want to do or find too boring to do. But the best application for robotics has yet to make itself clear. There are two reasons why this is the case. The technology is very, very new. The field is at best 60 years old. It's not clear exactly what robots are really going to be good at and what applications are really ideal for them to do. (Except for going to Mars: it's a lot of fun but very dangerous, very expensive, very hard to get people there, so robots are great for Mars.) The other problem with this thing is that we don't understand the nature of intelligence at all. Intelligence in general is very, very complicated. We don't even know what we don't know. We can't even ask the questions to begin to do the research to understand intelligence. We can't even define intelligence. Am I intelligent? I don't know. I might be. I might not be. Are ants intelligent? I don't know. Is this Tupperware box intelligent? Well, it might be. The problem of trying to get robots to act intelligently and do intelligent things.... It is difficult to articulate to people who aren't in the field how stupid robots are and how stupid computers are and how little they can do without very precise human control. "Little," actually, is an overstatement. How they can do nothing without precise human control.

NOVA scienceNOW: I remember Steve Squyres, the head of the current Mars mission, saying that his rovers are way dumber than your average laptop.

McLurkin: Oh, yes. And your average laptop is way dumber than your average bacteria. Yet robots can still be useful. My vacuum cleaner is a robot. It bounces around my apartment and does a nice job cleaning. It has limitations. It will get stuck. I have to go find it when I come home some days.
But as long as I can accept its limitations, it will do what I have asked it to do. Our cars are robots, essentially. People don't think of them like that, but most cars have five or six computers in them, all networked, all talking. If you buy an expensive car, you might get into the double digits of computers. Airplanes are very, very robotic. Autopilot is a classic example, where the robot is flying the plane. Robotics are starting to come into daily life disguised as cell phones and MP3 players and TiVos and things that people don't associate with robotics. Computers are taking over in that kind of way. There is an explosion right now in what are called embedded systems, where computers are built into common things and are literally everywhere. Everything we get has a computer in it. If it has power connected to it, you can be pretty sure that there is a computer in there—like microwaves, dishwashers, light switches, clocks, etc. Very, very exciting research is happening right now to figure out what can happen if all these simple computers can start to talk to each other.

NOVA scienceNOW: Like in Terminator, where the world's computers become hyperaware?

McLurkin: I've got a series of slides that address this exact issue. The problem is that Hollywood has done robots a disservice in a bunch of different ways. It makes very complicated tasks seem easy—people can build robots that do all these amazing things. In reality, we are decades, maybe even centuries away from things like that. And there are only three main plots. First, there's the Frankenstein plot, which is society's view on robots. There's the Tin Man plot, which is a robot trying to attain humanity. And then there is the Terminator plot, which is robots taking over the world. The way I address this in the talk is, the best way to avoid giant killer robots is to not vote for people who want to build giant killer robots. Robots, by their nature, are a technology. They are neither good nor bad.
Splitting of atoms is a technology. Cars are a technology. More people die in cars than—pick whatever statistic you want. Yet no one argues that cars are taking over the planet. So that is not something that I really worry about. It's probably thousands of years away anyway. We have more things to worry about now with normal, conventional weapons, with people who want to kill each other.

Life as a robot guy

NOVA scienceNOW: Can you ever see yourself not working with robots? Will that time ever come in your career, or are you always going to work with them?

McLurkin: I love building things. I have always loved building things. I love making things work. I love being able to write software and then watch that software make robots move and make the lights blink and the speakers go and things like that. Robotics is the highest form of that art, the art of electromechanical software systems. So I'll probably be here for awhile.

NOVA scienceNOW: Is the kind of work you do something you share with others, or do you work autonomously?

McLurkin: Both. You can never get to the next level on your own. You have to have comrades. You have to have counterpoint. You need people to inspire you. You need people to tell you, "You are being a moron." You need oversight. You need advisors and people who are more senior than you to say, "Yeah, people tried that in the '50s in Russia and it's not going to work. Try this."

NOVA scienceNOW: Have you had a mentor, someone to really get you fired up?

McLurkin: I can't say that I've ever really had a mentor per se. I have a lot of people senior to me who have taken a lot of time to get me moving in correct directions and tell me when I'm going in the wrong direction. I've got a lot of colleagues, people who are my age and my stature, whom I can bounce ideas off of and whom I can work with. That's maybe a third of it.
Then there's a large portion of it where I need to be chained to my desk, slaving over an algorithm with paper and pencil or wired into the robots, typing in software and watching the robots run. A lot of that really cannot be spread over multiple people. It's just you and your computer and your software until the wee hours of the morning. My ants are very active at this time of night too, so they can keep me company.

NOVA scienceNOW: For all the aspiring robot engineers out there, what kind of mind do you need for this kind of work?

McLurkin: The most important thing for any kind of work is to enjoy it, to have passion. To be a hands-on engineer, you need to have a mind that really likes building, that likes creating, that likes solving problems, that likes to take things apart, understand them, and get them back together and have them still work.

NOVA scienceNOW: Have you learned anything from the high school students you teach?

McLurkin: Lots and lots. They never fail to surprise me in terms of what they bring to the classroom and how they approach things and what they understand and what they don't understand. I'll spend three hours working on something that is 15 minutes of lecture that I think will cause a lot of difficulty, and I'll spend perhaps five minutes on things that I think are easy. When I go to teach, I discover the exact opposite, that the thing that I've prepared lots of examples for and thought through very carefully, they all get that. The thing that I didn't think needed to be explained because it was so easy, that's where the questions come. The real joy, though, is—well, there are two of them. When I see that they get it and can take it and run with it, that's really a lot of fun. And it is also really nice being surprised when they come up with questions or solutions or examples that you never thought of, because they're coming from a different world than you are.

Not just function anymore

NOVA scienceNOW: Last question: what's the most exciting thing you've heard about lately in your field or not in your field?

McLurkin: SpaceShipOne. SpaceShipOne is really cool. The fact that it's just a stick-and-rudder plane; it's not computer controlled. The guy's actually flying that thing all the way up and all the way down. And the problem that they set out to solve is mind-bogglingly hard. I mean, you have to get this thing in space, land it, tear it down, prep it, and get it back up. And it worked. Burt Rutan [SpaceShipOne's designer] is obviously brilliant. He's been called brilliant by people far more brilliant than I am, so clearly he must be brilliant. The other thing about SpaceShipOne is that it looks like it ought to. It looks like a space ship. It looks crazy and wild.

NOVA scienceNOW: All Rutan's creations are. There is such a wild look to them.

McLurkin: That's where he goes. He's got the combination of formidable engineering talent and a design aesthetic. And design is something that I've been spending a lot more time thinking about in the past two or three years, getting in touch with my right brain, my little, impoverished right brain. I just gave a talk at Honda. I talked about engineering creativity. There is a double entendre there: creativity for engineering, and how do you actually make creativity, the act of engineering creativity out of the things around you. And one of the things that you have to do is really study fine design.

You could have made SpaceShipOne look like a breadbox or something equally ugly and still have it perform. But he chose not to. He chose to make it elegant and beautiful and futuristic and crazy, which describes what the product is. The product is absolutely insane. I like things that are absolutely insane.
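
The charger-finding scheme described in the interview, in which each robot advertises how many communication hops it is from the chargers and a robot walks toward ever-smaller counts, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the iRobot Swarm's actual software: the robot names, the `network` map of who can hear whom, and the functions `hop_counts` and `route_to_chargers` are all hypothetical, and the synchronous rounds stand in for the robots' periodic broadcasts.

```python
import math

def hop_counts(neighbors, near_chargers):
    """Each robot repeatedly sets its count to 1 + the smallest count it hears.

    neighbors: dict mapping each robot to the robots within communication range
    (hypothetical data). near_chargers: robots that can see the chargers, which
    always report one hop.
    """
    hops = {r: (1 if r in near_chargers else math.inf) for r in neighbors}
    changed = True
    while changed:
        changed = False
        heard = dict(hops)  # snapshot of what every robot broadcast last round
        for robot, nbrs in neighbors.items():
            if robot in near_chargers:
                continue
            best = min((heard[n] for n in nbrs), default=math.inf) + 1
            if best < hops[robot]:
                hops[robot] = best
                changed = True
    return hops

def route_to_chargers(start, neighbors, hops):
    """Walk from robot to robot, always toward a neighbor with a smaller count."""
    if math.isinf(hops[start]):
        return []  # no chain of robots connects this one to the chargers
    path = [start]
    while hops[path[-1]] > 1:
        here = path[-1]
        path.append(min(neighbors[here], key=lambda n: hops[n]))
    return path

# Assumed example network: a chain a-b-c-d-e with a side branch c-f.
network = {
    "a": ["b"],
    "b": ["a", "c"],
    "c": ["b", "d", "f"],
    "d": ["c", "e"],
    "e": ["d"],
    "f": ["c"],
}
hops = hop_counts(network, near_chargers={"a"})
print(hops)
print(route_to_chargers("e", network, hops))
```

In this made-up six-robot network, robot "e" is five hops out and reaches the chargers by way of d, c, b, and a, the same five-, four-, three-, two-hop walk McLurkin describes. No robot needs a map or a global position; each one only compares the counts it hears from its neighbors.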

Interview conducted on December 16, 2004, and edited by Peter Tyson, editor in chief of NOVA online

Created January 2005